Automated Quantification of Photoreceptor Alteration in Macular Disease Using Optical Coherence Tomography and Deep Learning

Abstract

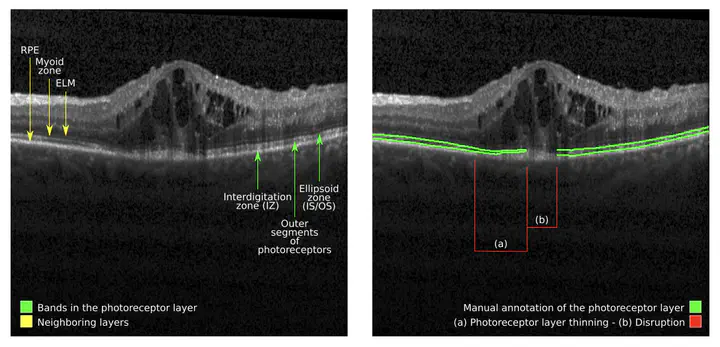

Diabetic macular edema (DME) and retinal vein occlusion (RVO) are macular diseases in which central photoreceptors are affected by pathological fluid accumulation. Optical coherence tomography (OCT) makes it possible to visually assess photoreceptor integrity, whose alteration has been identified as an important biomarker in both diseases. However, manually quantifying this layered structure is challenging, tedious and time-consuming. In this paper we introduce a deep learning approach for automatically segmenting and characterizing photoreceptor alteration. The photoreceptor layer is segmented using an ensemble of four different convolutional neural networks. En-face representations of the layer thickness are produced to characterize the photoreceptors. The pixel-wise standard deviation of the score maps produced by the individual models is also used to highlight areas of photoreceptor abnormality or ambiguous results. Experimental results showed that our ensemble performs on par with a human expert and outperforms each of its constituent models. No statistically significant differences were observed between mean thickness estimates obtained from automated and manually generated annotations. Our model is therefore able to reliably quantify photoreceptors, which can help improve the prognosis and management of macular diseases.
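The abstract does not state how the four score maps are fused or how thickness is measured, so the following is only a minimal NumPy sketch under explicit assumptions. It assumes that each network outputs a per-voxel photoreceptor probability for an OCT volume laid out as (B-scan, depth, A-scan), that the ensemble score is the plain average of the individual maps, and that the layer is obtained by thresholding that average at 0.5. En-face thickness is taken as the number of layer pixels along each A-scan multiplied by an axial pixel spacing. The pixel-wise standard deviation is reduced to an en-face uncertainty map by taking its maximum along depth, which is an illustrative choice and not necessarily the authors'. The function name `ensemble_photoreceptor_maps` and its parameters are hypothetical.

```python
import numpy as np


def ensemble_photoreceptor_maps(score_maps, axial_res_um, threshold=0.5):
    """Fuse per-model score maps into a mask, a thickness map and an uncertainty map.

    Hypothetical helper (assumptions, not the paper's code):
      score_maps   -- array (n_models, n_bscans, depth, width) of photoreceptor
                      probabilities, one map per ensemble member
      axial_res_um -- assumed axial pixel spacing in micrometres
      threshold    -- assumed cut-off applied to the averaged score
    """
    score_maps = np.asarray(score_maps, dtype=float)
    if score_maps.ndim != 4:
        raise ValueError("expected shape (n_models, n_bscans, depth, width)")

    # Ensemble score: unweighted average of the individual models' score maps.
    mean_score = score_maps.mean(axis=0)
    # Model disagreement: pixel-wise standard deviation across ensemble members.
    std_score = score_maps.std(axis=0)

    # Binary photoreceptor mask for every B-scan.
    mask = mean_score >= threshold

    # En-face thickness: layer pixels per A-scan times the axial spacing.
    thickness_um = mask.sum(axis=1) * axial_res_um   # shape (n_bscans, width)
    # En-face uncertainty: strongest disagreement found along each A-scan.
    uncertainty = std_score.max(axis=1)              # shape (n_bscans, width)
    return mask, thickness_um, uncertainty


if __name__ == "__main__":
    # Toy volume: 4 models, 2 B-scans, 6 depth rows, 3 A-scans per B-scan.
    scores = np.zeros((4, 2, 6, 3))
    scores[:, :, 1:4, 0] = 0.9   # all models agree on a 3-pixel layer in A-scan 0
    scores[:, :, 2:4, 1] = 0.9   # all models agree on a 2-pixel layer in A-scan 1
    scores[0, :, 2, 2] = 1.0     # only one model responds in A-scan 2

    mask, thick, unc = ensemble_photoreceptor_maps(scores, axial_res_um=4.0)
    print(thick[0].tolist())                # [12.0, 8.0, 0.0]
    print(np.round(unc[0], 3).tolist())     # [0.0, 0.0, 0.433]
```

In the toy volume, A-scan 2 is not segmented because a single model's vote is averaged down below the threshold, yet it is the only location with non-zero uncertainty. Because the thickness and uncertainty maps share the same (B-scan, A-scan) grid, they can be inspected side by side so that thin or missing photoreceptor regions are checked against areas where the models disagree.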