Biography

Hi there! I’m José Ignacio Orlando, but everyone calls me Nacho :)

I’m an Associate Researcher at CONICET, working as part of the Yatiris lab at the PLADEMA Institute in Tandil, Argentina. I’m also Director of AI Labs at Arionkoder, as part of a CONICET STAN. You can learn more about what we do at Arionkoder at this link. Last but not least, I’m a part-time professor at the Facultad de Ciencias Exactas, UNICEN, in Tandil, Argentina, where I teach Fundamentals of Data Science and a Workshop in Applied Mathematics.

My research interests include machine learning and computer vision techniques for medical imaging applications, mostly focused on ophthalmology. I’m also interested in other applications of machine learning, such as natural language processing. I’m always open to new collaborations and projects, so feel free to reach out if you think we could work together!

Apart from PLADEMA (where I did my PhD from 2013 to 2017), my previous affiliations include the Center for Learning and Visual Computing in Paris, France (2013, a 6-month internship funded by INRIA), ESAT-PSI at KU Leuven, Belgium (2016, 1 month as a visiting scholar), and the Christian Doppler Laboratory for Ophthalmic Image Analysis (OPTIMA) at the Medical University of Vienna, Austria (2018-2019, almost 2 years as a postdoctoral researcher).

Interests

- Machine/Deep Learning

- Computer Vision

- Ophthalmology

Education

- PhD in Computational and Industrial Mathematics, 2017, UNICEN

- Software Engineer, 2013, UNICEN

Featured Publications

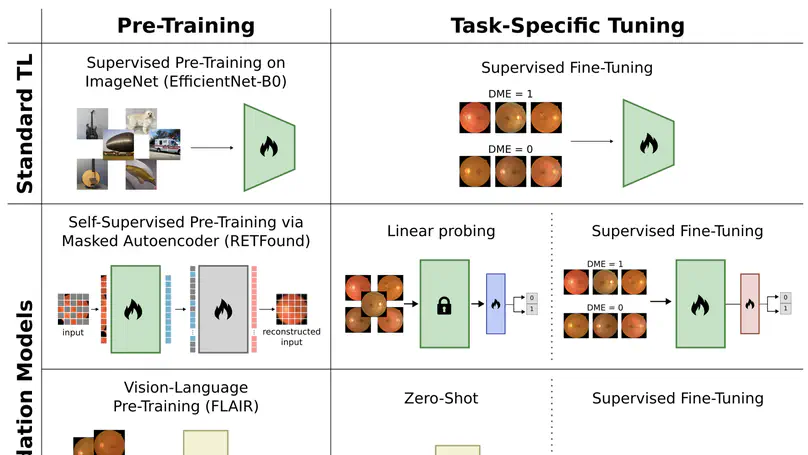

This paper evaluates fundus-specific foundation models for diabetic macular edema (DME) detection, comparing different architectures and training strategies to assess their effectiveness in automated DME screening from retinal fundus images.

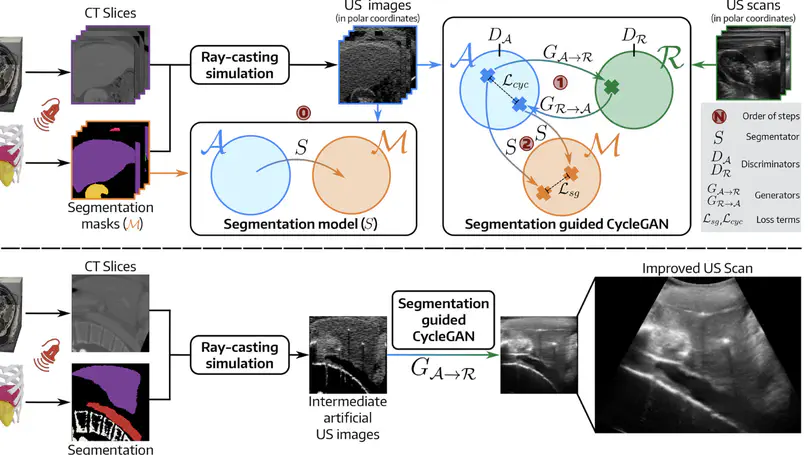

We propose a GAN that improves the realism of ultrasound (US) simulations by combining a segmentation-guided loss function with training in polar coordinates, leading to more realistic organ boundaries and fewer artifacts.

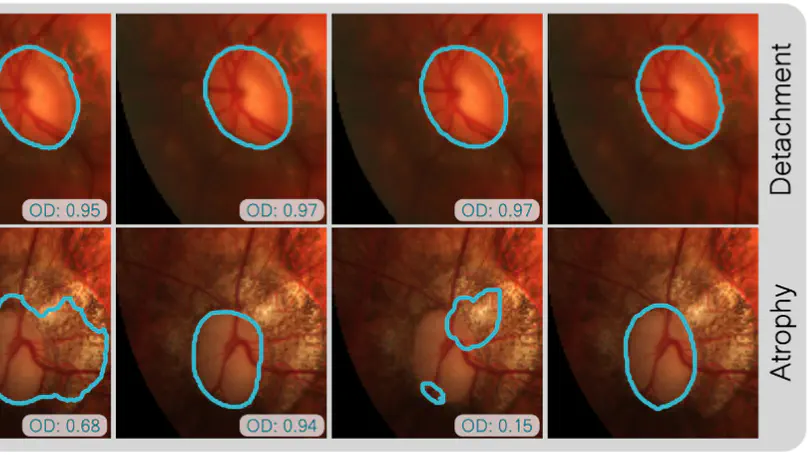

The paper evaluates domain generalization strategies for optic disc and cup segmentation in fundus images, highlighting issues with existing methods when applied to uncropped images, and proposes a semi-supervised learning approach based on the Noisy Student framework to improve performance across diverse datasets.

Publications

I try to keep this list up to date, but you’d better check my Google Scholar profile for the full list.


Teaching

These are all the courses I have worked on or am currently working on, either as a Teaching Assistant or as the Professor in charge.

Professor in:

- Computer Vision based on Artificial Neural Networks

- Machine Learning (Aprendizaje de Máquinas)

- Computer Vision with Artificial Intelligence (Visión Computacional basada en Inteligencia Artificial)

Teaching Assistant in:

- Workshop in Computational Mathematics (Taller de Matemática Computacional). 2015-2017, 2021-2024.

- Information Theory (Teoría de la Información). 2013-2015, 2020.

- Medical Imaging Workshop (Taller de Imágenes Médicas). 2015-2017.

- Software Development Methodologies (Metodologías de Desarrollo de Software). 2010-2013. Ad honorem.

Supervision

Students and Researchers I’ve supervised

Advisor: José Ignacio Orlando.

Topic: Web scraping of images with anomaly-detection-based filtering.

Advisor: José Ignacio Orlando.

Topic: Implantable collamer lens sizing with machine learning models

Advisor: José Ignacio Orlando. Co-advisor: Ignacio Larrabide

Topic: Deep learning tools for assisting screening of retinal diseases

Advisor: Ignacio Larrabide. Co-advisor: José Ignacio Orlando.

Topic: Machine learning methods for biomedical signal analysis

Advisor: Ignacio Larrabide. Co-advisor: José Ignacio Orlando.

Topic: Characterization of normal asymmetries in homologous brain structures

Advisor: José Ignacio Orlando. Programa de Fortalecimiento a la Ciencia y la Tecnología en las Universidades Nacionales (Program for Strengthening Science and Technology in National Universities). SECAT, UNICEN.

Topic: Deep learning algorithms for computer-assisted diagnosis of diabetic retinopathy from fundus photographs.

Advisor: Ignacio Larrabide. Co-advisor: José Ignacio Orlando. Consejo Interuniversitario Nacional (CIN).

Topic: DeepBrain: machine learning applied to the study of morphological brain alterations in MRI scans.

Advisors: José Ignacio Orlando, Carlos A. Bulant. Facultad de Ciencias Exactas, UNICEN.

Topic: Artery/Vein classification from color fundus photographs for blood flow simulations.

Advisors: José Ignacio Orlando, Ignacio Larrabide. Facultad de Ciencias Exactas, UNICEN.

Topic: Stereoscopic camera simulation using neural networks.

Advisor: Ignacio Larrabide. Co-advisor: José Ignacio Orlando.

Topic: Unpaired generative adversarial models for realistic abdominal ultrasound simulation.

Advisors: José Ignacio Orlando, Mariana del Fresno. Facultad de Ciencias Exactas, UNICEN.

Topic: Retinal blood vessel segmentation in ultra-wide field of view angiographies. Finished with grade 10/10.

Advisors: José Ignacio Orlando, Mariana del Fresno. Facultad de Ciencias Exactas, UNICEN.

Topic: Feature engineering for retinal blood vessel segmentation in fundus images. Finished with grade 10/10.

Advisors: José Ignacio Orlando, Mariana del Fresno. Facultad de Ciencias Exactas, UNICEN.

Topic: Integrating fuzzy C-means and deformable models for 3D medical image segmentation. Finished with grade 10/10.

Experience

General coordination and supervision of all the AI initiatives of the company.

Responsibilities include:

- Meeting with customers and prospects to determine the scope and requirements of their AI projects.

- Modelling AI solutions for customers’ scenarios.

- Coordinating the AI labs, including supervising ML Engineers, Data Engineers and Scientists.

Research project: retinAR: computing and discovering retinal disease biomarkers from fundus images using deep learning

Responsibilities include:

- Conducting research on retinal image analysis with deep learning

Research project: Deep learning for retinal OCT image analysis

Responsibilities include:

- Conducting research on photoreceptor segmentation in OCT images using deep learning

- Collaborating with colleagues from the lab on other retinal imaging projects

- Collaborating with Anna Breger and Martin Ehler from the University of Vienna

Research project: Red lesion detection in fundus photographs

Responsibilities include:

- Presenting ongoing research to ESAT-PSI staff.

- Finalizing a publication.

Research project: Retinal image analysis with machine learning. Supervisor: Prof. Matthew B. Blaschko (INRIA)

Responsibilities include:

- Developing a machine learning algorithm for retinal vessel segmentation in fundus images

Research project: Machine learning for ophthalmic screening and diagnostics from fundus images

Supervisors: Prof. Matthew B. Blaschko (KU Leuven) & Prof. Mariana del Fresno (UNICEN)